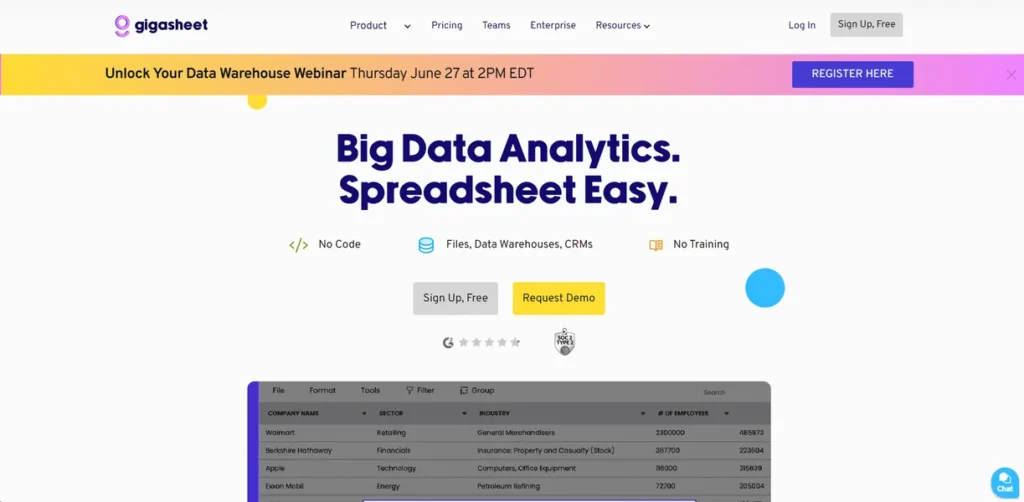

What is Gigasheet?

Gigasheet presents itself as a no-code platform for big data, but from an engineering perspective it is more accurately a high-level abstraction layer over a distributed data processing engine. It puts a familiar spreadsheet UI in front of datasets that exceed the limits of traditional applications: Microsoft Excel caps a worksheet at 1,048,576 rows, and Google Sheets caps a spreadsheet at 10 million cells. Because Gigasheet manages the underlying infrastructure for processing billions of rows, teams can perform large-scale data manipulation, cleaning, and analysis without the overhead of provisioning and managing a Spark cluster or a complex data warehouse. It serves as a pragmatic bridge between desktop tools and enterprise-grade data engineering stacks.

Key Features and How It Works

Gigasheet’s architecture is designed to offload heavy computation from the user’s machine to the cloud. Here’s a breakdown of its core components from a technical standpoint:

- Big Data Compatibility: The platform’s core value is its ability to ingest and process massive files, which the vendor says can reach billions of rows. It sidesteps the local memory constraints of desktop software by running operations on the server side, making it a viable tool for analyzing extensive server logs, large CSV exports from production databases, or comprehensive cybersecurity threat feeds.

- No-Code Analysis Engine: Gigasheet provides a graphical user interface for common data operations like filtering, grouping, joining, and aggregation. For developers, this functions as a rapid prototyping tool, allowing for quick exploration and validation of datasets before committing to writing complex SQL queries or Python scripts.

- Automated Workflows: Think of Gigasheet’s Automated Workflows as a CI/CD pipeline for your data. You define a series of steps (data cleaning, enrichment, filtering), and the platform re-runs this ‘build’ automatically whenever new data arrives or a schedule fires. This keeps repeated runs consistent and frees engineers from hand-running the same data preparation tasks.

- API and Integrations: A critical feature for any serious technical evaluation is programmatic access. Gigasheet provides an API and integrates with cloud storage like Amazon S3 and data warehouses. This allows it to function as a component within a larger, automated data pipeline, rather than just a standalone, manual tool. Data can be programmatically pushed, processed, and pulled for use in other applications.

- Spreadsheet A.I.: This feature is essentially a library of pre-built data enrichment functions. It can perform tasks like WHOIS lookups on IP addresses, sentiment analysis on text, or extracting URLs, all without requiring the user to write code or hook into third-party APIs for each individual task.
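The "series of steps" idea behind Automated Workflows can be illustrated locally. The sketch below is a hypothetical Python analogy, not Gigasheet’s implementation or API: each step (`clean`, `extract_urls`, `only_with_urls`, names invented for illustration) is a generator that consumes and yields rows, so the chain streams data one row at a time instead of loading the whole file. The URL-extraction step mirrors the kind of enrichment described under Spreadsheet A.I., using a deliberately simple regular expression.

```python
import csv
import io
import re
from typing import Callable, Dict, Iterable, Iterator, List

# A row is one CSV record keyed by column name.
Row = Dict[str, str]
# A step consumes a stream of rows and yields a (possibly modified) stream.
Step = Callable[[Iterable[Row]], Iterator[Row]]

# Deliberately simple URL pattern for illustration; real URL parsing is messier.
URL_RE = re.compile(r"https?://[^\s\"'<>]+")


def clean(rows: Iterable[Row]) -> Iterator[Row]:
    """Trim whitespace in every field and drop rows whose fields are all empty."""
    for row in rows:
        cleaned = {key: (value or "").strip() for key, value in row.items()}
        if any(cleaned.values()):
            yield cleaned


def extract_urls(rows: Iterable[Row]) -> Iterator[Row]:
    """Enrichment: add a space-separated 'urls' column found in 'message'."""
    for row in rows:
        found = [url.rstrip(".,;)") for url in URL_RE.findall(row.get("message", ""))]
        row["urls"] = " ".join(found)
        yield row


def only_with_urls(rows: Iterable[Row]) -> Iterator[Row]:
    """Filter: keep only rows where at least one URL was extracted."""
    for row in rows:
        if row["urls"]:
            yield row


def run_workflow(rows: Iterable[Row], steps: List[Step]) -> Iterator[Row]:
    """Chain the steps lazily; nothing executes until the result is consumed."""
    stream: Iterable[Row] = rows
    for step in steps:
        stream = step(stream)
    return iter(stream)


raw = "\n".join([
    "id,message",
    "1,  see https://example.com/a for details",
    "2,visit https://example.org/x.",
    "3,no link here",
    " , ",  # whitespace-only row, removed by clean()
]) + "\n"

result = list(run_workflow(csv.DictReader(io.StringIO(raw)), [clean, extract_urls, only_with_urls]))
for row in result:
    print(row["id"], row["urls"])
```

Because every step has the same shape, adding, removing, or reordering steps does not require touching the others, which is the property that makes a declared pipeline repeatable.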

Pros and Cons

From a developer’s viewpoint, the platform presents clear trade-offs.

Pros:

- Rapid Prototyping: Quickly investigate and clean massive datasets without writing boilerplate code, accelerating the initial stages of data analysis.

- Scalability Without DevOps: The platform abstracts away the complexity of managing distributed systems, offering immense scale without the associated infrastructure overhead.

- Team Empowerment: It enables non-technical stakeholders (marketing, sales, operations) to self-serve many of their own data requests, freeing up engineering resources.

- System Integration: A well-defined API means Gigasheet isn’t a data silo; it can be integrated as a processing step in a larger automated workflow.

Cons:

- Abstraction Limits: The convenience of a no-code interface comes at the cost of flexibility. Highly bespoke or complex data transformations may not be possible within the platform’s predefined functions.

- Performance Opacity: As a managed service, it gives you limited control over resource allocation. Debugging performance bottlenecks can be challenging because the underlying compute environment is a black box.

- Integration Gaps: While major cloud platforms are supported, connections to niche applications or proprietary internal systems will require custom development against the Gigasheet API.

Who Should Consider Gigasheet?

Gigasheet is designed for professionals who need to work with datasets that exceed the capacity of standard spreadsheets but who lack the resources for, or do not need, a full data engineering team.

- Cyber Security Analysts: Ideal for rapidly searching, filtering, and cross-referencing terabytes of log data, threat intelligence feeds, and forensic evidence without specialized tools.

- Sales and Marketing Operations: Enables teams to enrich, segment, and clean enormous lead lists or customer data exports from various SaaS platforms without writing scripts.

- Data Scientists and Analysts: A useful tool for initial data exploration, cleaning, and preparation (the transform stage of ETL) before moving the curated dataset into specialized environments such as Jupyter notebooks or R for advanced modeling.

- Engineering Leads: Can be rolled out to empower business teams, reducing the number of ad-hoc data requests that interrupt development sprints.
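Cross-referencing log data against a threat intelligence feed, as described for security analysts, is essentially a join. The sketch below shows the classic hash-join pattern in plain Python, independent of Gigasheet: load the smaller side (the feed) into a set, then stream the larger side (the logs) and keep matching rows. The `src_ip` column name, the one-IP-per-line feed format, and the function names are illustrative assumptions.

```python
import csv
import io
from typing import Dict, Iterable, Iterator, Set


def load_indicators(feed_lines: Iterable[str]) -> Set[str]:
    """Build a set of indicator IPs, skipping blank lines and '#' comments."""
    indicators: Set[str] = set()
    for line in feed_lines:
        value = line.strip()
        if value and not value.startswith("#"):
            indicators.add(value)
    return indicators


def flag_matches(
    log_rows: Iterable[Dict[str, str]], indicators: Set[str], ip_field: str = "src_ip"
) -> Iterator[Dict[str, str]]:
    """Stream the (large) log and yield rows whose IP appears in the feed."""
    for row in log_rows:
        if (row.get(ip_field) or "").strip() in indicators:
            yield row


# Sample data uses the RFC 5737 documentation address ranges.
feed = ["# sample threat feed", "203.0.113.7", "198.51.100.23", ""]
logs = io.StringIO(
    "timestamp,src_ip,action\n"
    "2024-01-01T00:00:00Z,192.0.2.10,allow\n"
    "2024-01-01T00:00:05Z,203.0.113.7,deny\n"
    "2024-01-01T00:00:09Z,198.51.100.23,allow\n"
)
hits = list(flag_matches(csv.DictReader(logs), load_indicators(feed)))
for hit in hits:
    print(hit["timestamp"], hit["src_ip"], hit["action"])
```

Set membership is a constant-time lookup on average, so the cost is dominated by a single pass over the logs no matter how many indicators the feed holds.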

Pricing and Plans

Gigasheet operates on a freemium model, allowing users to test the platform’s core functionality before committing to a paid plan. The pricing structure is designed to scale with usage and team size.

- Free Plan: A free-forever plan is available for individuals and small-scale projects. It is capped on file size and usage, but it is a practical way to evaluate the platform’s capabilities.

- Pro Plan: Starting at $39 per user/month, this plan raises the limits on file size and data storage and unlocks more advanced features and integrations.

- Enterprise Solutions: Custom pricing is available for large organizations requiring enhanced security, dedicated support, higher API rate limits, and team management features.

For the most current and detailed pricing, please refer to the official Gigasheet website.

What makes Gigasheet great?

Gigasheet’s single most powerful feature is that it puts analysis of billion-row datasets within reach of non-specialists by abstracting away the underlying infrastructure. It effectively solves the ‘too big for Excel’ problem without forcing users onto the steep learning curve of distributed computing frameworks or database administration. For a developer, its value lies not just in its UI but in its role as a serverless data processing utility: a fully managed, scalable component in a data stack that handles the heavy lifting of parsing, cleaning, and enriching massive files and is reachable through its API.

Frequently Asked Questions

- How does Gigasheet handle data security and privacy?

- Gigasheet employs standard enterprise-grade security measures, including data encryption in transit (TLS) and at rest (AES-256). For organizations with strict compliance needs, it’s recommended to consult their documentation on certifications like SOC 2 and data residency options available under Enterprise plans.

- Can I integrate Gigasheet into my existing automated data pipelines?

- Yes. The platform’s REST API is the primary mechanism for integration. You can programmatically upload files, trigger analysis workflows, and export the resulting data, allowing Gigasheet to act as a processing step within a larger, automated system orchestrated by tools like Airflow or custom scripts.
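The trigger, poll, and export part of that loop can be wrapped in a small client that an Airflow task or a cron script calls (the upload is assumed to have already happened and produced a file ID). The sketch below is hypothetical: `BASE_URL`, the `/workflows/{id}/runs` and `/runs/{id}` paths, and the `job_id`, `state`, and `export_url` fields are placeholders invented for illustration, not Gigasheet’s documented API, and authentication is omitted. The HTTP layer is injected as a transport function so the polling logic can be exercised offline.

```python
import json
import time
import urllib.request
from typing import Callable, Dict, List, Optional, Tuple

# HYPOTHETICAL: placeholder host and paths, NOT Gigasheet's documented API.
# Replace them with the real endpoints from the vendor's API reference.
BASE_URL = "https://api.example.invalid"

# A transport takes (method, url, JSON body bytes or None) and returns parsed JSON.
Transport = Callable[[str, str, Optional[bytes]], Dict]


def http_transport(method: str, url: str, body: Optional[bytes] = None) -> Dict:
    """Real HTTP transport using only the standard library (auth headers omitted)."""
    request = urllib.request.Request(
        url, data=body, method=method, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


def run_remote_step(
    transport: Transport,
    file_id: str,
    workflow_id: str,
    poll_interval: float = 5.0,
    timeout: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Trigger a (hypothetical) workflow run, poll until it ends, return the export URL."""
    payload = json.dumps({"file_id": file_id}).encode("utf-8")
    job = transport("POST", f"{BASE_URL}/workflows/{workflow_id}/runs", payload)
    job_id = job["job_id"]

    waited = 0.0
    while waited < timeout:
        status = transport("GET", f"{BASE_URL}/runs/{job_id}", None)
        if status["state"] == "succeeded":
            return status["export_url"]
        if status["state"] == "failed":
            raise RuntimeError(f"run {job_id} failed: {status.get('error')}")
        sleep(poll_interval)
        waited += poll_interval
    raise TimeoutError(f"run {job_id} did not finish within {timeout} seconds")


def make_fake_transport() -> Tuple[Transport, List[Tuple[str, str]]]:
    """Offline stand-in: reports 'running' once, then 'succeeded'."""
    states = iter([
        {"state": "running"},
        {"state": "succeeded", "export_url": "https://files.example.invalid/out.csv"},
    ])
    calls: List[Tuple[str, str]] = []

    def fake(method: str, url: str, body: Optional[bytes] = None) -> Dict:
        calls.append((method, url))
        if method == "POST":
            return {"job_id": "job-1"}
        return next(states)

    return fake, calls


fake, calls = make_fake_transport()
export_url = run_remote_step(fake, "file-123", "clean-and-enrich", sleep=lambda _: None)
print(export_url)
```

Injecting the transport and the sleep function keeps the control flow (trigger, poll, fail fast, time out) testable without network access; in production you would pass `http_transport` and map the placeholder paths to the real ones.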

- What are the technical limitations on dataset size?

- Gigasheet claims to support files with up to billions of rows and hundreds of columns. In practice, performance depends on the complexity of the data and of the operations being performed. The platform is optimized for long datasets (many rows), and row count is precisely where in-memory tools such as desktop spreadsheets hit their limits.
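Why row count is the bottleneck becomes clearer when compared with the alternative. A desktop spreadsheet loads every row before it can aggregate, whereas a streaming aggregation keeps only the current row plus one counter per distinct key, so memory grows with the number of groups rather than the number of rows. The sketch below is a generic local technique in Python, not a description of Gigasheet’s engine; `synthetic_log` and `count_by` are illustrative names.

```python
import csv
from collections import Counter
from typing import Iterable, Iterator


def count_by(lines: Iterable[str], key: str) -> Counter:
    """Group-by-and-count over a CSV stream, holding one row at a time."""
    counts: Counter = Counter()
    for row in csv.DictReader(lines):
        counts[row[key]] += 1
    return counts


def synthetic_log(n_rows: int) -> Iterator[str]:
    """Lazily generate a 'long' CSV: a header plus n_rows short rows."""
    yield "status,path\n"
    statuses = ("200", "404", "500")
    for i in range(n_rows):
        yield f"{statuses[i % 3]},/page/{i}\n"


counts = count_by(synthetic_log(300_000), "status")
print(dict(counts))  # {'200': 100000, '404': 100000, '500': 100000}
```

For a real file, pass an open file handle instead, for example `with open(path, newline="") as f: count_by(f, "status")`, where `path` and the `status` column are placeholders.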

- Is the ‘no-code’ interface a bottleneck for developers?

- It can be. The no-code UI is optimized for speed and accessibility for a specific set of common data operations. For highly specialized algorithms or complex, multi-stage transformations, it is not a replacement for Python or SQL. Developers should view it as a tool for rapid exploration and empowering business users, while using the API for integration.